How does a (senior) product and UX designer use AI? What's the best tool for each situation? These are questions designers and business owners ask me more and more. Here's my answer.

Recently, I spoke with a potential client who works in the field of serious games. He was pretty proud to mention that some of the supporting images in his latest game were created using AI.

He then asked me a question I hear more and more when talking to founders and business owners: "What are your thoughts on using AI to design? And how do you use it?"

Seeing that pattern appear more clearly made me think. I use quite a bit of AI as I work on product design projects, but why? And how? I should really write it down somewhere!

Here's the result.

Let's talk about vision and mindset for AI in product design as two separate things. The vision is my big picture, and the mindset is how I apply that vision in my day-to-day work as a product designer.

For me, excellent product design and development is all about human-driven quality first. That might sound strange given how we mostly use AI to automate and speed up work, but bear with me. It'll make sense in a bit.

In an AI-driven world, where the 'middle of the road' becomes wider every day, I maintain a very high bar for quality and design standards; stand-out product design helps my clients succeed.

In practice, it means I use AI to learn and get better first, and to speed up my work second.

Mindset plays a massive role here. Learning something new or fixing problems used to involve buying books, attending live courses, and reading documentation. Today, you take a screenshot, put it into Claude, brainstorm, and figure it out.

One thing that will set designers apart is their design knowledge. You'll need it to validate any kind of design-related AI output. Without it, we're just guessing and 'vibing.'

On the design theory and knowledge front, I've been exposed to design since I was six years old, and I've been tinkering ever since. Later, in school, I learned classic design principles that gave structure to the design gut feeling I already had. These principles include Gestalt psychology, color theory, typography fundamentals, and more.

This is quite the opposite of today's new generation of designers, many of whom grow up with AI from the start. As a result, they often lack the foundational design knowledge I mentioned earlier.

One could argue that they no longer need it ("AI is going to be that good"), but I don't think that's the case. You need to be able to validate AI output to check its quality and whether (and how) it fits the user flow, client branding, and overall design goals of the project.

Because of my early exposure to design, I have developed a natural eye for it. This foundation helps me validate AI output in ways that someone without that background might miss. I know what good design looks like, and I will notice when AI gets it wrong.

Alright. Now that my vision and mindset are in place, it is time to look at the three main categories of my product design workflow where AI plays a significant role.

In addition to my natural design experience, I have a background in HTML, CSS, and JavaScript. I took a class on them at university, and it still helps me a lot today, especially when delivering design in code is part of my project scope.

Just before AI became as mainstream as it is today, I started learning TailwindCSS, as I noticed more clients discussing it. I did this the old-school way: reading documentation and watching YouTube videos. That works, of course, but it took a while.

In today's AI era, I have learned enough Laravel and React to work on actual projects and deliver production-ready code. The coding background I mentioned helps, but AI plays a big role, too. More on that later.

Depending on the project, I now deliver my product design work in HTML, (Tailwind)CSS, JavaScript, Laravel (Blade), Bootstrap, and even React.

Projects include Resub's website and application (Laravel), the Leadsie application (React), and this very website, which is custom-built using HTML and TailwindCSS.

Using AI to speed up repetitive work around data, numbers, and copy is a perfect use case. Funny enough, this is an area where that 'learn first, speed second' vision I mentioned doesn't fully apply. Let's look at an example.

For one of my design-to-code projects, I converted a full Figma design into HTML and TailwindCSS code. My first step is to set up the colors and a desktop version of the home page. This is mostly manual work, although I suspect a Figma-Claude MCP connection could be of (some) help here in the near future.

Once that's done, I use the HTML of the home page as input and work with Claude to add the correct font size, line height, and weights to all text elements for all breakpoints.
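To make that step a little more concrete, here is what the target output looks like. In TailwindCSS, responsive typography is written as utility classes: unprefixed classes apply at every screen width, and breakpoint prefixes such as `md:` apply from that breakpoint upward. The TypeScript sketch below turns a per-breakpoint typography spec into such a class string. It is only a hypothetical illustration. In this project the step was done in conversation with Claude, not with a script. The names `TextStyle`, `ResponsiveText`, and `toTailwind`, as well as the example sizes, are made up.

```typescript
// Hypothetical sketch: TextStyle, ResponsiveText, and toTailwind are illustrative
// names, not part of TailwindCSS or of the project described above.
type Breakpoint = "sm" | "md" | "lg" | "xl" | "2xl";

interface TextStyle {
  size?: string;    // font-size utility, e.g. "text-3xl"
  leading?: string; // line-height utility, e.g. "leading-tight"
  weight?: string;  // font-weight utility, e.g. "font-bold"
}

// A mobile-first base style plus optional overrides per breakpoint.
interface ResponsiveText {
  base: TextStyle;
  overrides?: Partial<Record<Breakpoint, TextStyle>>;
}

// Tailwind's default breakpoints, from smallest to largest.
const ORDER: Breakpoint[] = ["sm", "md", "lg", "xl", "2xl"];

// Collects the utilities that are actually set on a style.
function utilities(style: TextStyle): string[] {
  return [style.size, style.leading, style.weight].filter(
    (u): u is string => typeof u === "string" && u.length > 0,
  );
}

// Unprefixed utilities apply at every width; prefixed ones (e.g. "md:")
// apply from that breakpoint's min-width upward.
function toTailwind(spec: ResponsiveText): string {
  const classes = utilities(spec.base);
  for (const bp of ORDER) {
    const style = spec.overrides?.[bp];
    if (style) {
      classes.push(...utilities(style).map((u) => `${bp}:${u}`));
    }
  }
  return classes.join(" ");
}

// Example: a hero heading that grows at the md and lg breakpoints.
const heroHeading: ResponsiveText = {
  base: { size: "text-3xl", leading: "leading-tight", weight: "font-bold" },
  overrides: {
    md: { size: "text-5xl" },
    lg: { size: "text-6xl", leading: "leading-none" },
  },
};

console.log(toTailwind(heroHeading));
```

The sketch keeps the base style mobile-first and lists only what changes at each breakpoint. That mirrors how Tailwind's responsive prefixes work, so a trained eye can check the output quickly.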

For this project, I helped an Austria-based development company turn their existing Figma design into an HTML and TailwindCSS website.

Just last year, this would have taken me hours to do manually. Using Claude, I was able to finish this step of the project much faster.

That 'design eye' and my existing knowledge of HTML, CSS, and JS helped a lot here, too. They allowed me to validate Claude's output and check the quality of the work. Whenever I spotted a design issue, I could fix it on the go without going back to the design agency that created the initial Figma design.

Besides being a product designer, I've always been a builder. Most of my favorite games are strategy games or city builders, where optimising part of my 'build' is what excites me most.

That excitement also applies to my work and how I approach things. For example, I've automated my 'podcast episode release flow' to post and share the episode's URL in all relevant places without me touching anything. It is all powered by Zapier and Claude.

AI helps me with content, too. But again, speed isn't the main benefit; it's only a secondary one. I'll show you what I mean next.

AI-generated content ("AI slop") is super easy to spot when it is just copy-pasted online. At the same time, AI can help with content generation. What you need is a set of guidelines.

To create that set of writing guidelines, I uploaded a collection of articles I had written by hand over the years and asked Claude to analyze them and draft writing guidelines. I then refined those guidelines and added my own. For example, I don't like formal grammar structures or over-the-top heading copy. 'Default AI output' contains both, and it doesn't fit my personal brand at all.

I use these guidelines as input whenever I ask Claude to clean up my dictation or generate a post draft. The guidelines are always a work in progress, and I update them constantly.

By the way, the output is never just published. I proofread and edit every post to make it my own. It might not be as quick that way, but the foundation (and, consequently, the result) is of a significantly higher quality.

Using AI to support your product design work makes a lot of sense. I use it more and more myself, but not everywhere. There are situations where I leave AI out of my work on purpose.

Let's look at the onboarding flow of a medical startup first. Onboarding is critical to the success of any product, because your (new) users aren't that attached to you yet. One wrong move and they're off to a competitor. With that in mind, the onboarding experience has to be on point.

To impress users during onboarding and get them to a moment of activation as quickly as possible, I create a human-made flow of the highest quality. It includes hand-drawn (animated) visuals and choices made based on strategic design thinking. There's no AI involved in the design phase at all. The onboarding is just too important.

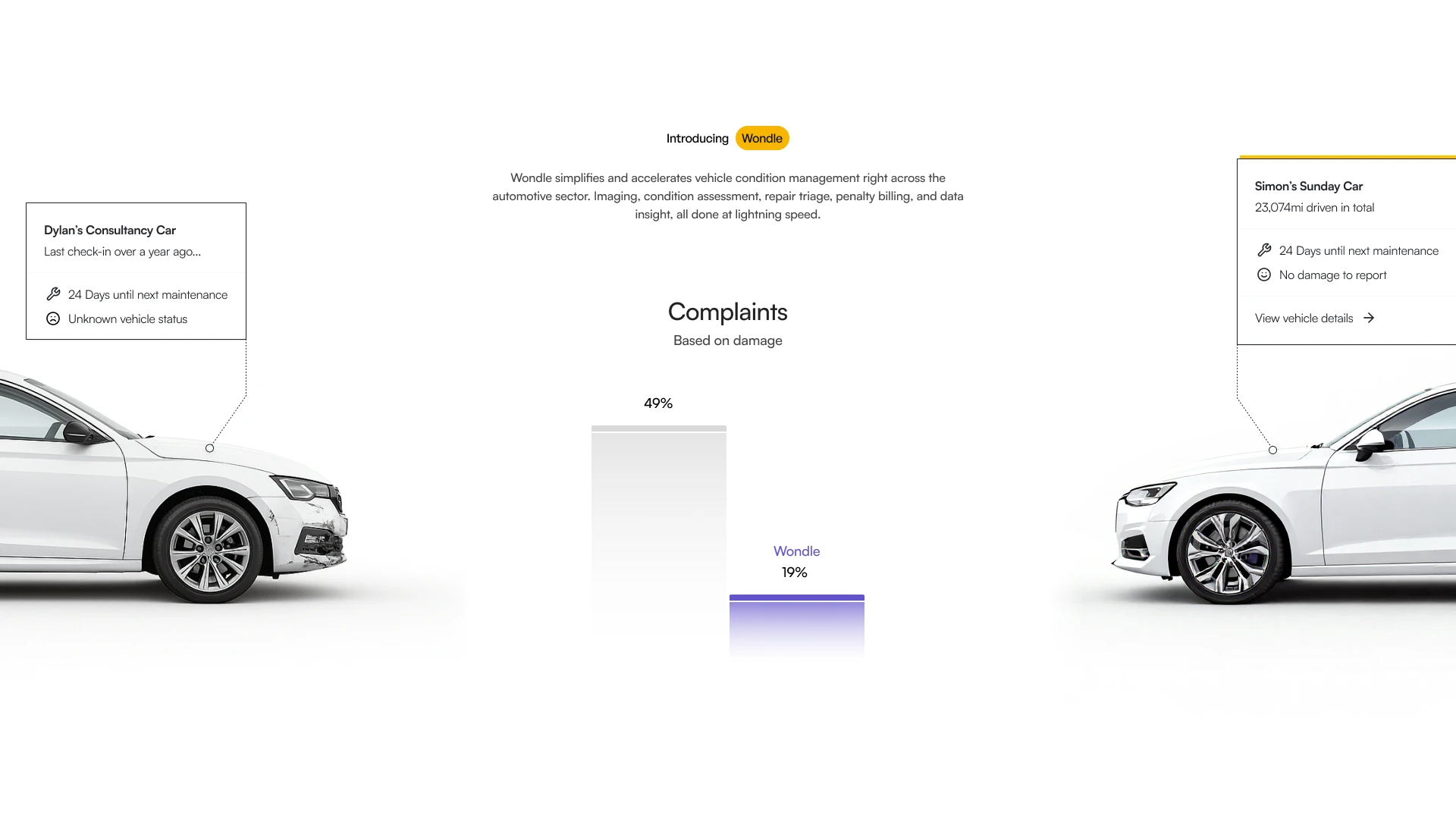

For a project in the automotive space, which deals with car damage assessment, I designed a landing page spread that compares shots of a car with and without damage. Everything is hand-made except for the car damage visual, where tools like ChatGPT and Visual Electric saved me a lot of time.

See that car on the left? It has AI-generated damage on its front bumper. The rest of the page is human-made landing page design.

And finally, another medical project I'm involved with has a lot of video content with backgrounds the client wasn't happy with. Based on discussions with my client, I used Visual Electric to generate several better background images. Then, I used Claude's research mode to find the most effective way to bulk-edit over 70 videos. That helped me edit each video within five minutes instead of the usual 10-15 minutes.
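As a rough sense of scale, here is an estimate that assumes exactly 70 videos at five minutes each and ignores setup time. The estimate below is my own arithmetic based on those numbers, not a measurement.

$$
\begin{aligned}
\text{with AI:}\quad & 70 \times 5\ \text{min} = 350\ \text{min} \approx 5.8\ \text{h} \\
\text{before:}\quad & 70 \times (10 \text{ to } 15)\ \text{min} = 700 \text{ to } 1050\ \text{min} \approx 11.7 \text{ to } 17.5\ \text{h} \\
\text{saved:}\quad & (700 - 350) \text{ to } (1050 - 350) = 350 \text{ to } 700\ \text{min} \approx 5.8 \text{ to } 11.7\ \text{h}
\end{aligned}
$$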

In this final example, I primarily use AI to save time (instead of the 'become better first' approach I usually use).

Let's go through what tools I actually use during my product design day-to-day. As a principle, I try to stay as lean as possible. I'd rather have fewer apps that do more than multiple small apps. That just becomes too crowded.

Claude is my primary AI tool. I use it to learn new frameworks, review code, and brainstorm ideas. I have also recently started using it as part of that Zapier workflow automation I mentioned above.

I use it to brainstorm content, too. This post is a perfect example. Writing about how I use AI had been on my mind for days. Here's how I went about it.

As a starting point, I recorded myself talking for over 20 minutes about AI and how I use it. I ran the recording through MacWhisper to get a transcript, which I then used as input for a conversation with Claude to refine and structure my thoughts.

One thing I've learned from my many conversations with Arvid is to ask Claude to ask me questions. It helps me think, and it allows Claude to gain better context. My final step is to manually edit and refine Claude's output. That personal touch and human writing are super important and will always be a part of my writing.

Up next is image generation. I enjoy drawing icons and visuals by hand, but sometimes you need something generated. That's where Visual Electric comes in.

That automotive project I talked about is a good example: an image of car damage is much easier to obtain when generated. Just a few years ago, I would have had to find a damaged car to photograph or rely on stock photos.

I found that image generation works well for some types of visuals, but not for everything. At one point during the project, I had to use ChatGPT to clean up the Visual Electric output. It just wasn't quite right.

For note-taking during meetings, I use Granola. I'm currently on a trial version to see if and how it fits my workflow.

Granola transcribes your meetings, summarises the transcript, and lets you ask questions about your meetings. It is super helpful for kick-off meetings and brainstorm sessions. So far it works pretty well, but only for my English meetings.

As for my code editor, Cursor is slowly replacing Visual Studio Code in my workflow. Funny enough, out of all the cool things it does, Cursor's tab-to-complete is my favorite feature. As more of my clients switch, I want to be proficient with the tools they use, so I can deliver my design work in code or work directly on their codebase.

Zapier powers my automation. It isn't as AI-heavy as the other tools I've shared so far, but it's still worth mentioning.

In the aforementioned podcast automation, Zapier connects Transistor to Claude, Webflow, and Slack. It helps me create a Webflow CMS item, write video descriptions, collect URLs, and more. It saves me a lot of time each week.
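The order of those steps can be sketched as a small pipeline. This TypeScript sketch is a hypothetical illustration, not my actual Zap, which is configured in Zapier rather than written in code. The `Episode` shape, the `ReleaseSteps` interface, and the order (description first, then the CMS item, then Slack) are assumptions. None of the functions mirror the real Transistor, Webflow, Slack, or Claude APIs. The steps are synchronous to keep the sketch short.

```typescript
// Hypothetical sketch: Episode and ReleaseSteps are illustrative shapes only.
// They do not mirror the real Transistor, Webflow, Slack, or Claude APIs;
// in practice, each step is an action inside a Zapier workflow.
interface Episode {
  title: string;
  url: string;   // the episode URL published by the podcast host
  notes: string; // show notes used as input for the description
}

interface ReleaseSteps {
  writeDescription(episode: Episode): string;                   // e.g. a Claude step
  createCmsItem(episode: Episode, description: string): string; // returns the new page URL
  postToSlack(message: string): void;
}

// Runs the (assumed) steps in order and returns the collected URLs.
function releaseEpisode(episode: Episode, steps: ReleaseSteps): string[] {
  const description = steps.writeDescription(episode);
  const pageUrl = steps.createCmsItem(episode, description);
  const urls = [episode.url, pageUrl];
  steps.postToSlack(`New episode: ${episode.title}\n${urls.join("\n")}`);
  return urls;
}

// Usage with in-memory fakes standing in for the real services.
const posted: string[] = [];
const fakeSteps: ReleaseSteps = {
  writeDescription: (e) => `Summary of ${e.title}`,
  createCmsItem: (e) =>
    `https://example.com/episodes/${e.title.toLowerCase().replace(/\s+/g, "-")}`,
  postToSlack: (message) => {
    posted.push(message);
  },
};

const urls = releaseEpisode(
  { title: "Episode 1", url: "https://example.com/audio/1", notes: "Show notes" },
  fakeSteps,
);
console.log(urls);
```

Passing the steps in as an interface keeps the flow's order in one place. The fakes show that flow without touching any real service.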

I'm planning to experiment more with Lovable. It appears to be making such great progress that it is becoming hard to ignore.

The only reason I haven't used it is that I haven't had a clear use case for it yet. As I mentioned before, I use AI to learn and improve on the go. I don't use a tool just because; there has to be a clear and valid reason for it.

Besides Lovable, I might also try Midjourney or another image generator to see if it works better than Visual Electric.

UI is a big part of a product designer's day-to-day, and you might have noticed that I haven't mentioned Figma or anything UI-related (in a design tool) just yet.

That's on purpose. Why? The short answer is that AI tools for UI aren't good enough yet. They output generic 'middle of the road' UI and spaghetti code. It takes me more time to clean it up than it would have taken to design from scratch.

These tools will likely improve, which means I will have to improve as well (I love a challenge). But I doubt they will ever get good enough to fully replace this part of a product designer's job.

Speaking of…

Will AI replace product designers? No, it will not. Based on my experience as a designer and business owner over the last few years, I've been busier with projects than ever. AI mostly boosts productivity under the hood; it doesn't produce high-quality frontend design output.

One thing I do notice, however, is that AI both lowers the entry point and raises the ceiling of what a designer can do.

I've had founders and project managers who gave me AI-generated mockups and wireframes as starting points for design tasks. That's a lower entry point right there. And as I mentioned in this post, AI helps me do better design work that I can deliver in code. It raises my level a lot!

With the speed of AI news and development in mind, this post will probably be the one I (have to) update the most. And that's fine! I enjoy testing AI-powered experiments in my day-to-day design work and then writing about them.

That's probably the biggest thing: if you're curious and focus on trying new things, AI isn't scary at all. Sure, not every experiment will stick around, but the few that do will improve your workflow in wonderful ways.

Do not let AI influencers (and their clickbait content) bring you down. On the whole, AI is great for any product designer.